I must admit that I find the reflective practices harder than most of the more 'traditional' things on the course. I think it's because they're less about something tangible, where you can chart your progress and see whether you've succeeded or failed. Having said all that, I like how the reflective practices build on each other and get you to completely reassess how you write a blog entry. And of course, given what Rudaí 23 is all about, they make you reassess how you look at developments in the library world. When you look at it like that, the reflective practices are something tangible. It's not just thinking about something; in fact, as mentioned in thing#17, "action without reflection leads to meaningless activism, while reflection without action means we are not bringing our awareness into the world".

A reflection on reflections

|

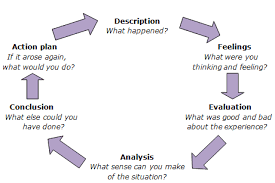

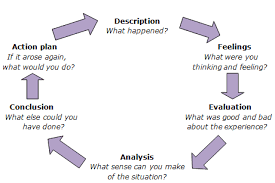

| Gibbs' Reflective Cycle, from cumbria.ac.uk |

I definitely think I could have used Gibbs' chart on some of my blog posts (for example, thing#5 about social media). I went into a lot of detail about my own experience of using social media, but failed to make the connection between that and how social media is used where I work (and in the library world in general). What a thing to miss! It's not just that social media is huge in the library world (and even if I hadn't known that, the rest of the Rudaí course would have been a real eye-opener!); it's also pretty important where I work. Even a short paragraph about how we use social media to advertise our services to students, and whether it works or not, would have been a nice way to end the post.

It's hard to look back over something you wrote so long ago (in many ways), but I think I was more concerned with completing the task than with describing the process. I'm beginning to realise how much the process matters: how you approach a task, what preparations you make, and, particularly, how you apply that task to your career.

Using reflective practice in your job

The task that Rudaí has set for thing#17 is "write a blog entry describing how you could use reflective practice in your library experience". As I mentioned before, I like it when tasks are concrete and apply to our real-world experience, so I'm going to apply this to my job, which is in a small academic library in Dublin.

I've worked on some exciting projects since I started in the library, but the one I'm going to apply what I'm learning about reflective practice to is a user-testing project I did over the summer. I chose it because user-testing was something I had never done before, and something I had never (necessarily) associated with a traditional library role. One or both of those descriptions can usually be applied to any of the Rudaí 23 things.

Some background about the project

I was lucky in that my boss was very helpful and encouraging about it. She knew I had never done anything like it before, but was keen to give me (nearly) complete control of it. She suggested some reading I could do to prepare myself for both the theory behind user-testing and how to plan and implement it. After doing some reading around the subject, I focused on one particular book, Steve Krug's Don't Make Me Think, which seemed to be geared towards exactly what we were looking for: a cheap model of user-testing that could be conducted with hardware and software we already had access to. I had regular meetings with my boss about what she wanted from the project and who we wanted to participate.

After this, I needed to take care of some practical issues. I needed to contact various people in the college who might know of students who would fit our parameters, and then book a room in the library based on the students' availability. I quickly realised that I would have to be very flexible to accommodate a whole group of people who were essentially doing us a favour. This meant that the project would not be completed in the way I had originally envisaged: students booked in at the beginning of a three-week period, with time in between to write up the reports. It would be messy, but it would be okay. Or at least, I hoped it would be.

What I did next and how I felt about the project

Steve Krug's book, which was the key to a successful user-testing project.

After I had got all the practical issues out of the way, I followed the steps in Krug's book about how to prepare for the actual testing. Things flowed relatively smoothly from this point. In nearly every test I discovered something that would be useful for our new website. Such is the nature of user-testing that even when you think you're not getting anything from a particular test, the very fact that you're 'getting nothing' is actually telling you something (some users will find the whole process very easy and straightforward). I was feeling more and more confident about the project at this stage, as I could see real, verifiable results, and I was already spotting basic navigation problems on the website that needed to be addressed.

Throughout the whole process, I kept reminding myself that preparation was the key to the project succeeding or failing. I needed to remember the theory, to have everything booked in advance, and to adapt my generic script before each individual test. I also needed to set aside time to prepare for each test before it took place, and to keep numerous documents about participation, notes, etc. up to date.

Evaluating the project

I found the whole project a great learning experience. It definitely challenged my preconceptions about what librarians do, and I felt that I was doing something important. I could also see how it fitted in with the many changes the library was going through over the summer (we were getting a new website, new LibGuides, a new LMS). It was also frustrating in parts, but I think any project - especially one that you haven't done before - is going to have elements of that. Another thing I learned was to always go with your gut (in this case, by 'gut' I mean Krug's book). Krug keeps making the point that testing more than approximately five users just increases your workload with no visible impact on the overall test, but we had agreed that we wanted to test as many users as possible, specifically students at different stages of their academic career as well as international students. After approximately five user-tests, I could see that the issues students were having with the system were starting to repeat themselves, regardless of their status. Even so, I think it was good to see in practice what Krug was getting at, and the next time we do some user-testing, we can avoid this.

Analysis

The most important aspect of the project was that it achieved its main aim: identifying possible issues with navigation around our new website. It was also really interesting to see first-hand how students navigated the site, as well as their reactions to some of the new services we were offering. On a personal level, I realised that I could manage every detail of a project: planning, implementation, and successful delivery. The project was well received, both in the library and in the college in general, and it was great to see how it would support all the changes the library was in the process of introducing.

Conclusion

After the project had finished, I used my reports to write up a basic document summarising the issues the testing had highlighted. Those deemed urgent were acted on immediately, while other issues were set aside (either to be looked at in the future or because they weren't high on the list of priorities). This is, of course, an important part of user-testing, and while it seems self-evident (what's the point in user-testing if you're not going to act on the recommendations?), it is stressed in most of the material I read about user-testing, so it is evidently a point that bears repeating.

I think that for any future user-testing I do, I will try to follow the theory more closely, specifically with regard to how many people we test, and I'll try not to cover too much in each test. Also, despite my obsessing over keeping notes, I would like to set aside more time for this, as well as keeping screenshots to give a better overview of how the project progressed. Using the tips from thing#17 will definitely help with this.

Hi Bryan

Great post. Did you include the perceptions of the students in the report, and did they direct any modifications that you made to the system? I'm just being curious: did you interview students, or how did you gather data from them?

Wayne

The Rudai23 Team

Oh, yeah, I probably should've included that in my post! The general idea (or at least what I took from the literature about user-testing) was that you take note of what the users are doing (primarily) but also what they're saying about what they're doing (if you know what I mean).

In the script I prepared before the tests, you repeatedly tell the users that you want them to 'talk aloud' about what they're doing. All of the reports I did were about what they did and what they said they were doing. Every change we made was based on one of these things (or both!).

I think this approach works well. If you were just focusing on what they were doing, you would be left with 'the user was hesitant about where to go to find the exam papers they were looking for' (for example), but because I was encouraging them to talk about it, we realised that the hesitation was caused by the way we were labelling the exam papers, which was confusing and unintuitive.

All of the 'interviewing' was done during the testing. I didn't talk to the students before the test (except to work out a time that suited them) or after it.